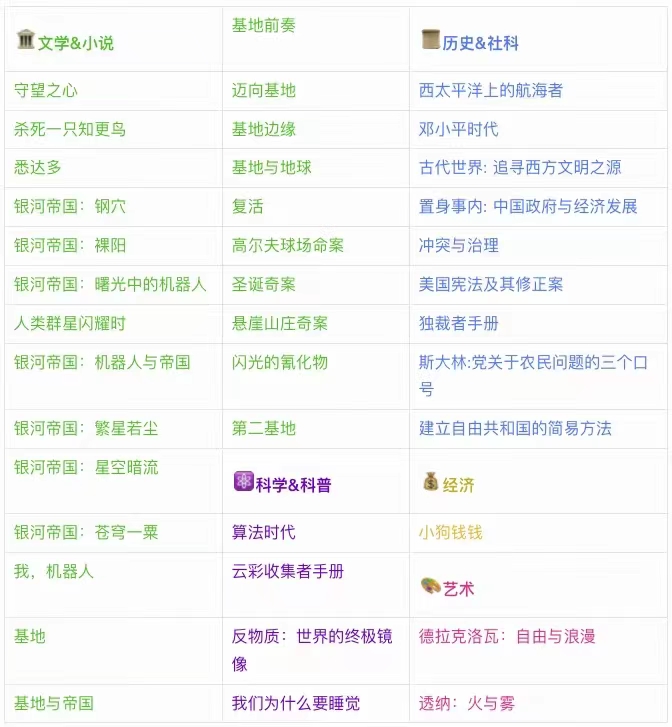

Resampling and importance-weighting

What is the Effect of Importance Weighting in Deep Learning?

Jonathon Byrd & Zachary C. Lipton

CMU

ICML 2019

We present the surprising result that importance weighting may or may not have any effect in the context of deep learning, depending on particular choices regarding early stopping, regularization, and batch normalization.

Setting: deep models / classification / SGD optimizer

Conclusion

- L2 regularization and batch normalization (but not dropout) interact with importance weights, restoring (some) impact on learned models.

- As training progresses, the effects due to importance weighting vanish.

- Sub-sampling the training set, instead of down-weighting the loss function, does have a noticeable effect on classification ratios. We find that weighting the loss function of deep networks fails to correct for training-set class imbalance, whereas sub-sampling a class in the training set clearly affects the network's predictions. This suggests that sub-sampling may be an alternative to importance weighting for deep networks trained on sufficiently large training sets.

- Effects from importance weighting on deep networks may only occur in conjunction with early stopping, disappearing asymptotically.
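To make the contrast in the third point concrete, here is a short derivation in notation introduced only for illustration (it is not taken from the paper): $n$ training examples $(x_i, y_i)$, a model $f_\theta$, a loss $\ell$, per-class importance weights $w_c$, and $w_{\max} = \max_c w_c$. Importance weighting minimizes the weighted empirical risk, while sub-sampling keeps example $i$ independently with probability $w_{y_i}/w_{\max}$ (indicator $z_i$) and minimizes the unweighted loss on what remains:

$$
\hat{R}_w(\theta) = \frac{1}{n}\sum_{i=1}^{n} w_{y_i}\,\ell\big(f_\theta(x_i), y_i\big),
\qquad
\mathbb{E}\left[\frac{1}{n}\sum_{i=1}^{n} z_i\,\ell\big(f_\theta(x_i), y_i\big)\right]
= \frac{1}{n}\sum_{i=1}^{n} \frac{w_{y_i}}{w_{\max}}\,\ell\big(f_\theta(x_i), y_i\big)
= \frac{1}{w_{\max}}\,\hat{R}_w(\theta).
$$

So both procedures target the same objective up to a constant factor in expectation; the finding above is that, for deep networks trained long enough, they nonetheless lead to different models.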

Survey of resampling techniques for improving classification performance in unbalanced datasets

Ajinkya More

UMICH

arXiv

Methods & Results

Results are reported as precision on the majority class (L) and recall on the minority class (S). Each method is briefly introduced below, followed by its results (precision/recall):

- Baseline logistic regression (LR) (0.90/0.12)

- Weighted loss function: weights inversely proportional to the class sizes (0.98/0.89)
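A minimal sketch of the weighted loss for a binary task, assuming the common "balanced" rule $w_c = n / (K \cdot n_c)$ (the rule scikit-learn uses for `class_weight="balanced"`); the survey may normalize its weights differently, and the helper names are illustrative only.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Class weight w_c = n / (K * n_c): rarer classes get proportionally larger weights.

    Assumption: this normalization is the 'balanced' heuristic, not necessarily the survey's.
    """
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * n_c) for c, n_c in counts.items()}


def weighted_log_loss(y_true, p_pred, class_weights, eps=1e-12):
    """Mean binary cross-entropy with each example's loss scaled by its class weight."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += class_weights[y] * -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


labels = [0] * 90 + [1] * 10
weights = inverse_frequency_weights(labels)
print(weights)  # {0: 0.5555555555555556, 1: 5.0}
```

With these weights each class contributes the same total weight (90 × 5/9 = 10 × 5 = 50), so the minority class is no longer drowned out by the majority in the summed loss.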

Undersampling methods:

- Random undersampling of the majority class (0.97/0.82)

- NearMiss: a family of methods that undersample the majority class based on the distances between its points and the points in the small class S. NearMiss-1 retains the points from L whose mean distance to their k nearest points in S is lowest, where k is a tunable hyperparameter (here k=3). (0.92/0.32)

- NearMiss-2 keeps those points from L whose mean distance to the k farthest points in S is lowest (k=3). (0.95/0.60)

- NearMiss-3 selects k nearest neighbors in L for every point in S (k=3). (0.91/0.20)

- Condensed Nearest Neighbor (CNN) chooses a subset U of the training set T such that, for every point in T, its nearest neighbor in U is of the same class. (0.93/0.39)

- Edited Nearest Neighbor (ENN) undersamples the majority class by removing points whose class label differs from the majority of their k nearest neighbors (k=5). (0.95/0.59)

- Repeated Edited Nearest Neighbor: the ENN algorithm is applied repeatedly until it can remove no further points. (0.97/0.80)

- Tomek Link Removal: a pair of examples is called a Tomek link if they belong to different classes and are each other's nearest neighbors. Either both points of each link are removed, or only the one belonging to L. (0.91/0.21)
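A minimal pure-Python sketch of several of the undersampling rules above for a binary problem, assuming Euclidean distance and brute-force neighbour search. It also assumes NearMiss keeps exactly |S| majority points, which the notes do not state. All function names are hypothetical, not from the survey or any library; CNN and NearMiss-3 are left out for brevity.

```python
import math
import random


def _neighbours(X, i, k):
    """Indices of the k points nearest to X[i] (Euclidean), excluding i itself."""
    others = [j for j in range(len(X)) if j != i]
    return sorted(others, key=lambda j: math.dist(X[i], X[j]))[:k]


def _subset(X, y, keep):
    keep = sorted(keep)
    return [X[i] for i in keep], [y[i] for i in keep]


def random_undersample(X, y, majority, seed=0):
    """Randomly keep only as many majority points as there are minority points."""
    rng = random.Random(seed)
    maj = [i for i, c in enumerate(y) if c == majority]
    mino = [i for i, c in enumerate(y) if c != majority]
    return _subset(X, y, rng.sample(maj, len(mino)) + mino)


def near_miss(X, y, majority, k=3, farthest=False):
    """NearMiss-1 (farthest=False) or NearMiss-2 (farthest=True): rank majority points by
    mean distance to their k nearest (or farthest) minority points, smallest first."""
    mino = [i for i, c in enumerate(y) if c != majority]
    maj = [i for i, c in enumerate(y) if c == majority]

    def score(i):
        d = sorted((math.dist(X[i], X[j]) for j in mino), reverse=farthest)[:k]
        return sum(d) / len(d)

    maj.sort(key=score)
    return _subset(X, y, maj[:len(mino)] + mino)  # assumption: keep exactly |S| of L


def enn(X, y, majority, k=5):
    """Edited Nearest Neighbours: drop majority points whose label disagrees
    with more than half of their k nearest neighbours."""
    keep = []
    for i, c in enumerate(y):
        disagree = sum(y[j] != c for j in _neighbours(X, i, k))
        if c != majority or disagree <= k / 2:
            keep.append(i)
    return _subset(X, y, keep)


def repeated_enn(X, y, majority, k=5):
    """Apply ENN until a pass removes nothing more."""
    while True:
        X_new, y_new = enn(X, y, majority, k)
        if len(y_new) == len(y):
            return X_new, y_new
        X, y = X_new, y_new


def remove_tomek_majority(X, y, majority):
    """Find Tomek links (mutual nearest neighbours with different labels)
    and drop only the majority-class member of each link."""
    nn = [_neighbours(X, i, 1)[0] for i in range(len(X))]
    drop = set()
    for i, j in enumerate(nn):
        if nn[j] == i and y[i] != y[j]:
            drop.add(i if y[i] == majority else j)
    return _subset(X, y, [i for i in range(len(y)) if i not in drop])


# Usage: eight majority points on a line, two minority points at the right end.
X = [(float(i), 0.0) for i in range(10)]
y = [0] * 8 + [1] * 2
X_nm, y_nm = near_miss(X, y, majority=0)  # keeps the two majority points closest to S
```

Brute-force neighbour search keeps the sketch dependency-free but costs O(n²) distance computations; a real implementation would use a spatial index.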

Oversampling methods:

- Random oversampling of minority class. (0.97/0.76)

- SMOTE (0.97/0.77)

- Borderline-SMOTE1 (0.97/0.78)

- Borderline-SMOTE2 (0.97/0.80)
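A minimal sketch of the oversampling side, assuming a binary problem and Euclidean distance: random duplication, the basic SMOTE interpolation step, and the "DANGER" set that Borderline-SMOTE1 uses as its seed points. Function and parameter names are hypothetical; Borderline-SMOTE2, which also interpolates towards majority neighbours, is not shown.

```python
import math
import random


def random_oversample(X, y, minority, seed=0):
    """Duplicate randomly chosen minority points until the two classes are the same size."""
    rng = random.Random(seed)
    mino = [i for i, c in enumerate(y) if c == minority]
    n_extra = (len(y) - len(mino)) - len(mino)  # majority size minus minority size
    idx = list(range(len(y))) + [rng.choice(mino) for _ in range(max(n_extra, 0))]
    return [X[i] for i in idx], [y[i] for i in idx]


def smote(minority_pts, n_new, k=5, seeds=None, seed=0):
    """SMOTE: each synthetic point lies on the segment between a seed point and one of
    its k nearest minority neighbours. Passing the DANGER set as `seeds` gives a
    Borderline-SMOTE1-style generator."""
    rng = random.Random(seed)
    seeds = minority_pts if seeds is None else seeds
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(seeds)
        others = [p for p in minority_pts if p != x]
        nb = rng.choice(sorted(others, key=lambda p: math.dist(x, p))[:k])
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(x, nb)))
    return synthetic


def danger_points(X, y, minority, m=5):
    """Borderline-SMOTE's DANGER set: minority points for which at least half, but not
    all, of their m nearest neighbours (in the whole training set) are majority points."""
    danger = []
    for i, c in enumerate(y):
        if c != minority:
            continue
        others = [j for j in range(len(X)) if j != i]
        nbrs = sorted(others, key=lambda j: math.dist(X[i], X[j]))[:m]
        n_maj = sum(y[j] != minority for j in nbrs)
        if m / 2 <= n_maj < m:
            danger.append(X[i])
    return danger
```

Unlike random duplication, SMOTE produces new points between existing minority examples, which is why it is less prone to making the classifier memorize the few minority points.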

Combination methods:

- SMOTE + Tomek Link Removal (0.97/0.80)

- SMOTE + ENN (0.97/0.92)

Ensemble methods:

- Easy Ensemble: sample several subsets Li of L with |Li| = |S|, train an AdaBoost classifier on each Li ∪ S, and combine all of them into a single ensemble. (0.98/0.88)

- Balance Cascade: similar to EasyEnsemble, except that the classifier created in each iteration influences the selection of points in the next iteration (majority points it already classifies correctly are removed from L). (0.99/0.91)
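A minimal sketch of the EasyEnsemble sampling scheme for a binary problem. To keep it short, two substitutions are made and should be read as assumptions: each member is a nearest-centroid classifier instead of an AdaBoost ensemble, and members are combined by majority vote, whereas EasyEnsemble combines the AdaBoost members' weighted scores. All names are hypothetical; BalanceCascade differs only in how the next subset is drawn and is not shown.

```python
import math
import random
from collections import Counter


def fit_centroids(X, y):
    """Stand-in base learner (NOT AdaBoost): store one mean point per class."""
    cents = {}
    for c in set(y):
        pts = [x for x, lab in zip(X, y) if lab == c]
        cents[c] = tuple(sum(col) / len(pts) for col in zip(*pts))
    return cents


def predict_centroids(cents, x):
    """Predict the class whose centroid is closest to x."""
    return min(cents, key=lambda c: math.dist(x, cents[c]))


def easy_ensemble(X, y, majority, n_subsets=5, seed=0):
    """Each member sees all of S plus a fresh random subset L_i of L with |L_i| = |S|."""
    rng = random.Random(seed)
    maj = [i for i, c in enumerate(y) if c == majority]
    mino = [i for i, c in enumerate(y) if c != majority]
    models = []
    for _ in range(n_subsets):
        idx = rng.sample(maj, len(mino)) + mino
        models.append(fit_centroids([X[i] for i in idx], [y[i] for i in idx]))
    return models


def ensemble_predict(models, x):
    """Combine members by majority vote (a simplification of EasyEnsemble's score sum)."""
    votes = Counter(predict_centroids(m, x) for m in models)
    return votes.most_common(1)[0][0]
```

Because every member is trained on a balanced subset, no single member is biased towards L, while the ensemble as a whole still sees many different majority points.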

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit 陆陆自习室 when reposting!
